Dear readers,

We’re gradually migrating this curation feature to our Weekly Newsletter. If you enjoy these summaries, we think you’ll find our Substack equally worthwhile.

On Substack, we take a closer look at the themes from these curated articles, examine how language shapes reality and explore societal trends. Aside from the curated content, we continue to explore many of the topics we cover at TIG in an expanded format—from shopping and travel tips to music, fashion, and lifestyle.

If you’ve been following TIG, this is a chance to support our work, which we greatly appreciate.

Thank you,

the TIG Team

No psychiatric treatment has attracted quite as much cash and hype as psychedelics have in the past decade. Articles about the drugs’ surprising results—including large improvements on depression scores and inducing smokers to quit after just a few doses—earned positive coverage from countless journalists (present company included). Organizations researching psychedelics raised millions of dollars, and clinicians promoted their potential to be a “new paradigm” in mental-health care. Michael Pollan’s 2018 psychedelics book, How to Change Your Mind, became a best seller and a Netflix documentary. Psychedelics were made out to be a safe solution for society’s most challenging mental-health problems.

But the bubble has started to burst: It’s been a bad year for fans of psychedelics.

A few months ago, two articles appeared, one in The New York Times and another in Business Insider, that portrayed major figures in psychedelics research as evangelists whose enthusiasm for the drugs compromised the integrity of their findings. In August, the FDA rejected the first application for therapy assisted by MDMA, the drug commonly known as ecstasy, saying that it “could not be approved based on data submitted to date,” according to the company that brought the application, Lykos. And five people, including two doctors, were recently charged in the death of the Friends actor Matthew Perry, who was found unconscious in his pool after he took large doses of the psychedelic ketamine. (Three of the five have reached plea agreements; the other two pleaded not guilty.)

These incidents, though unrelated, point to a problem for psychedelic research: Many of the studies underpinning these substances’ healing powers are weak, marred by a true-believer mentality among its researchers and an underreporting of adverse side effects, which threatens to undermine an otherwise bright frontier in mental-health treatment.

Psychedelics are by nature challenging to research because most of them are illegal, and because blinding subjects as to whether they’ve taken the drug itself or a placebo is difficult. (Sugar pills generally do not make you hallucinate.) For years, scientific funding in the space was minimal, and many foundational psychedelic studies have sample sizes of just a few dozen participants.

The field also draws eccentric types who, rather than conducting research with clinical disinterest, tend to want psychedelics to be accepted by society. “There’s been really this cultlike utopian vision that’s been driving things,” Matthew W. Johnson, himself a prominent psychedelic researcher at Sheppard Pratt, a mental-health hospital in Baltimore, told me.

Read the rest of this article at: The Atlantic

Celebrating its 30th anniversary on Oct. 14, “Pulp Fiction” has left a massive footprint on moviemaking.

Originally conceived as an anthology by writer-director Quentin Tarantino and his longtime friend, collaborator and Video Archives coworker Roger Avary, the film evolved into a funny, violent, endlessly inventive, non-linear odyssey. In addition to reviving the career of John Travolta, minting a star in Samuel L. Jackson and spawning a still-thriving cottage industry of knockoffs and imitation films, “Pulp” earned the 1994 Cannes Film Festival Palme d’Or, seven Academy Award nominations and one win (for Tarantino and Avary’s screenplay), while its commercial success ($213 million off of an $8.5 million budget) forever changed the economics of independent cinema.

To commemorate the legacy and impact of “Pulp Fiction,” Variety spoke with more than 20 members of the film’s cast and crew to solicit their experiences and recollections. Armed with more than 100 pages of interviews, we’ve elected to break down this retrospective into two sections. This article covers the film’s conception and its release, and another will delve into the nuts and bolts of the production itself.

Roger Avary, cowriter, story: The original idea for “Pulp Fiction” was, we’re going to make three short films with three different filmmakers. I’m going to make one, Quentin’s going to make one and we had a pal, Adam Rifkin, who was going to make one. I wrote a script called “Pandemonium Reigns,” and along the way, my little short film expanded into a feature-length script. “Reservoir Dogs” expanded into a feature-length script. Adam just never wrote his, and “Pulp Fiction” for a while was something that wasn’t going to happen.

Danny DeVito, executive producer: Stacey Sher knew Quentin, and she set up a meeting for us. After about six minutes of talking with Quentin, I said, “I want to make a deal right now.” There was a little Quentin pause, and he said yes. And I made a deal with him. I hadn’t seen “Reservoir Dogs” yet because it was still being made.

Avary: And then Quentin does “Reservoir Dogs” and he’s getting all sorts of offers to do really cool studio projects. But he basically came back and called me one day and said, “I keep thinking about ‘Pulp Fiction,’ and I think I want to make it as one movie and direct it all myself.” So we took my script [to “Pandemonium Reigns”] and we collapsed it back down, and then we went to Amsterdam and we took all the scenes that we’d ever written that hadn’t been already put into movies. And out came eventually “Pulp Fiction.”

Read the rest of this article at: Variety

Why Celebrities Stopped Being Cool

When I was growing up, “cool” was everything. The musicians, actors, writers, and talking heads we idolized and tried to imitate were outspoken, often on drugs, and sometimes straight-up bad people. I recognize that it was a simpler time before social media and stan culture. People of note could do as they pleased without much risk of blowback—but now, in our sanitized world, cool has become almost meaningless. Playing it safe is the top priority for some of our most prominent and influential artists.

Lately, my friend Lauren Sherman has been chronicling the discussion around the baffling outfits of Taylor Swift and her BFF Blake Lively in her Puck newsletter Line Sheet. Swift has never been a fashion icon. Not working with major designers is unheard of for someone of her stature. But I agree with the prevailing theory, advanced by Sherman and many others, that it is all done intentionally. Ultra high fashion and advanced-stylist-collab looks signify wealth and interfere with the illusion that the wearer is Just Like Us, stepping out each day in whatever’s clean and close at hand.

Read the rest of this article at: GQ

OpenAI announced this week that it has raised $6.6 billion in new funding and that the company is now valued at $157 billion overall. This is quite a feat for an organization that reportedly burns through $7 billion a year—far more cash than it brings in—but it makes sense when you realize that OpenAI’s primary product isn’t technology. It’s stories.

Case in point: Last week, CEO Sam Altman published an online manifesto titled “The Intelligence Age.” In it, he declares that the AI revolution is on the verge of unleashing boundless prosperity and radically improving human life. “We’ll soon be able to work with AI that helps us accomplish much more than we ever could without AI,” he writes. Altman expects that his technology will fix the climate, help humankind establish space colonies, and discover all of physics. He predicts that we may have an all-powerful superintelligence “in a few thousand days.” All we have to do is feed his technology enough energy, enough data, and enough chips.

Maybe someday Altman’s ideas about AI will prove out, but for now, his approach is textbook Silicon Valley mythmaking. In these narratives, humankind is forever on the cusp of a technological breakthrough that will transform society for the better. The hard technical problems have basically been solved—all that’s left now are the details, which will surely be worked out through market competition and old-fashioned entrepreneurship. Spend billions now; make trillions later! This was the story of the dot-com boom in the 1990s, and of nanotechnology in the 2000s. It was the story of cryptocurrency and robotics in the 2010s. The technologies never quite work out like the Altmans of the world promise, but the stories keep regulators and regular people sidelined while the entrepreneurs, engineers, and investors build empires. (The Atlantic recently entered into a corporate partnership with OpenAI.)

Despite the rhetoric, Altman’s products currently feel less like a glimpse of the future and more like the mundane, buggy present. ChatGPT and DALL-E were cutting-edge technology in 2022. People tried the chatbot and image generator for the first time and were astonished. Altman and his ilk spent the following year speaking in stage whispers about the awesome technological force that had just been unleashed upon the world. Prominent AI figures were among the thousands of people who signed an open letter in March 2023 to urge a six-month pause in the development of large language models (LLMs) so that humanity would have time to address the social consequences of the impending revolution. Those six months came and went. OpenAI and its competitors have released other models since then, and although tech wonks have dug into their purported advancements, for most people, the technology appears to have plateaued. GPT-4 now looks less like the precursor to an all-powerful superintelligence and more like … well, any other chatbot.

Read the rest of this article at: The Atlantic

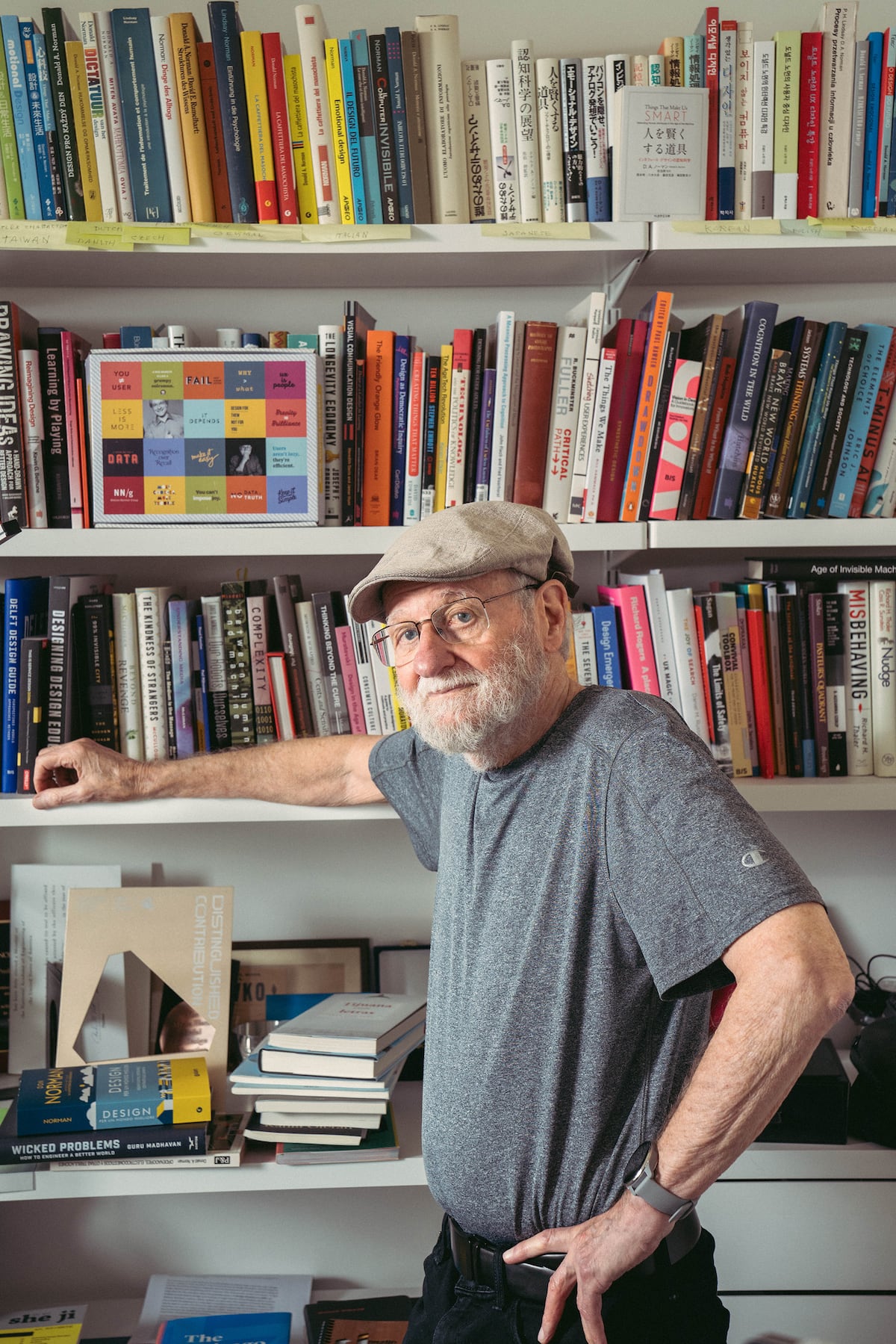

Don Norman is a man with a beard and a prophet’s frustration, a design superstar. He became an Apple vice president at a time when the company seemed destined for ruin and left when it was well on the route to becoming an empire. While he counts contributing to the design of the iPhone itself among his many achievements, today he is almost incapable of picking up one.

“Which way am I supposed to hold this device?” he growls at the vertical Zoom through which he speaks to us from his home in San Diego. “It’s almost impossible to hold it without your finger hitting the screen, and if you touch the screen, you can do something you didn’t mean to.” That something, depending on which apps one has open, might involve calling someone you don’t want to call, sending a sticker to a work chat or taking a beautiful, out-of-focus photo of the wires curled underneath the television, a passer-by’s ankle or whatever else happens to be directly in front of the phone.

“Apple computer used to be famous for the fact that you wouldn’t even need a manual. You could just pick up the telephone or plug in the computer and in seconds, you could use it and learn. It was self-explanatory,” says Norman, with the kind of fluid speech that can only come from decades of university teaching. “But unfortunately, the designers who care only about aesthetics and beauty have taken over. And I also blame the journalists who have said that the iPhone screen should be as big as possible, with no boundary [and that the center button that pre-2017 models featured should disappear]. Because when the telephone rings, I can no longer answer the phone.”

In case there was any doubt as to whether the matter was settled, Norman continues: “What happened was that Apple fell prey to the disastrous part of design, which is that design is about making something beautiful and elegant. And I say, nonsense, that’s not what my kind of design is about. My kind of design is — sure, I want it to be attractive and I want it to be nice, but more important than anything else is that I know I can use it freely and that it’s easy to learn and that it doesn’t keep changing. Apple believes that words are ugly, they try not to use them, and you have to memorize all these gestures, up and down, left and right, one finger, two fingers, three fingers, one tap, two taps, a long tap, starting from the top of screen, the middle of the screen. Who can remember that?”

Here, Norman, 88, lays bare certain essential details pertaining to his persona. He is a man with a very clear notion of the function that an object’s design must achieve, an idea so clear and so powerful that it’s practically a way of understanding the world. He likes to expound on the theory in long, astute soliloquies, the likes of which have filled hours upon hours of university classes, from Harvard to Stanford and most of the academic institutions in between.

Read the rest of this article at: El Pais