Here is a very dumb truth: for a decade, the default answer to nearly every problem in mass media communication involved Twitter. Breaking news? Twitter. Live sports commentary? Twitter. Politics? Twitter. A celebrity has behaved badly? Twitter. A celebrity has issued a Notes app apology for bad behavior? Twitter. For a good while, the most reliable way to find out what a loud noise in New York City was involved asking Twitter. Was there an earthquake in San Francisco? Twitter. Is some website down? Twitter.

The sense that Twitter was a real-time news feed worked in both directions: people went on Twitter to find out what was going on, and reporters, seeing a real-time audience of people paying attention to news, started talking directly to those people. Twitter knew this and played right into it. In 2009, co-founder Biz Stone wrote that the platform had become a “new kind of information network” and that the prompt in the tweet box would now be, “What’s happening?”

“Twitter helps you share and discover what’s happening now among all the things, people, and events you care about,” he wrote. In short, Twitter was for the news.

In the heady days of the millennial media startup boom of the 2010s, the sense that large social platforms would pay publishers for content and create a new class of lucrative digital media outlets was pervasive and unquestioned. And in those early years, no one knew which platforms would succeed. It is almost impossible to believe, but there was a time when Twitter felt like a real competitor to Facebook.

“Twitter was remarkably easy to work with, compared to the other social media platforms,” says New York Magazine editor-at-large Choire Sicha, who once ran platform partnerships for The Verge’s parent company, Vox Media, a role that entailed trying to make the platforms care about journalism enough to pay for it — or at least send enough referral traffic to our websites to make giving it away for free worth it. “Google was mysterious and wizard-behind-the-curtain-y; Snapchat was whiplash central, and every time you came back around, the person you dealt with last had been fired; Facebook was an opaque and officious Death Star.”

Read the rest of this article at: The Verge

“By the way, how are you managing with the 100-copy collection?”

“Huh? What do you mean, the 100-copy collection?”

“The books in the safe. Don’t neglect your library duties. It’d be a disaster if anything leaked to the outside.”

I set off for the library at a run. There were books in that safe? I had no idea. I figured, at best, it would be a stash of treatises by the leaders on literary theory, or else records of secret directives for KWU eyes only. It turned out that the 100-copy collection was where the union stored translated copies of foreign novels and reference books that writers could access.

With the speed of a bank robber, I yanked out my key, turned the lock and opened the safe. Inside, tightly packed together, were nearly 70 translated copies of foreign novels. Seeing them, I crumpled to the floor in shock.

The first title to jump out at me was Seichō Matsumoto’s Points and Lines, a Japanese psychological thriller published in 1970. With growing excitement, I fumbled through the stack. There was Ernest Hemingway’s For Whom the Bell Tolls, O Henry’s The Last Leaf, Alexandre Dumas fils’ The Lady of the Camellias, Takiji Kobayashi’s Crab Cannery Ship, Dante’s Divine Comedy, Giovanni Boccaccio’s Decameron, Victor Hugo’s Les Misérables, Emily Brontë’s Wuthering Heights, Margaret Mitchell’s Gone With the Wind; and, most exciting of all for me, Seiichi Morimura’s Proof of the Man, a Japanese detective novel that tells the story of a manhunt from Tokyo to New York.

I had joined the KWU in the late 1980s. At that time, the only foreign literature ordinary North Koreans could access was that of other socialist nations, chiefly the USSR and China. I had read Russian writers like Maxim Gorky, Anton Chekhov and Leo Tolstoy, as well as the Chinese classic The True Story of Ah Q by Lu Xun. Occasionally, translations of classics like Shakespeare’s works were published. But nobody even dreamed of seeing literature from enemy countries such as the US and Japan.

Some zainichi returnees like myself had brought books from Japan, which we passed around secretly. One of these was Proof of the Man. Upon finding a Korean-language copy in the 100-copy collection, I was struck by the quality of the translation. I later learned that it had been done by a zainichi acquaintance of mine who worked as a translator.

“Those sneaky bastards. If we ordinary citizens were to read this we’d be put away for political crimes, but they get to enjoy it all in secret,” my zainichi friend grumbled when I showed him.

“You can’t tell anyone about this. I’d get arrested.”

“Hey, they don’t have any graphic novels, do they? I’d love to see Golgo 13, Blackjack, or Captain Tsubasa again.”

Having stumbled upon this windfall, I devoured the contents of the 100-copy collection. My favourite was Guy de Maupassant. I was deeply impressed by his short stories The Necklace and Boule de Suif and used them as models for my own work.

Any mismanagement of the 100-copy collection would be prosecuted as a political crime, since it would in effect be distributing capitalist reactionary materials to the public. I don’t understand the logic, but I’ve heard that the Narcotics Control Law deems it a greater crime to sell or transport illegal materials than to consume them.

Read the rest of this article at: The Guardian

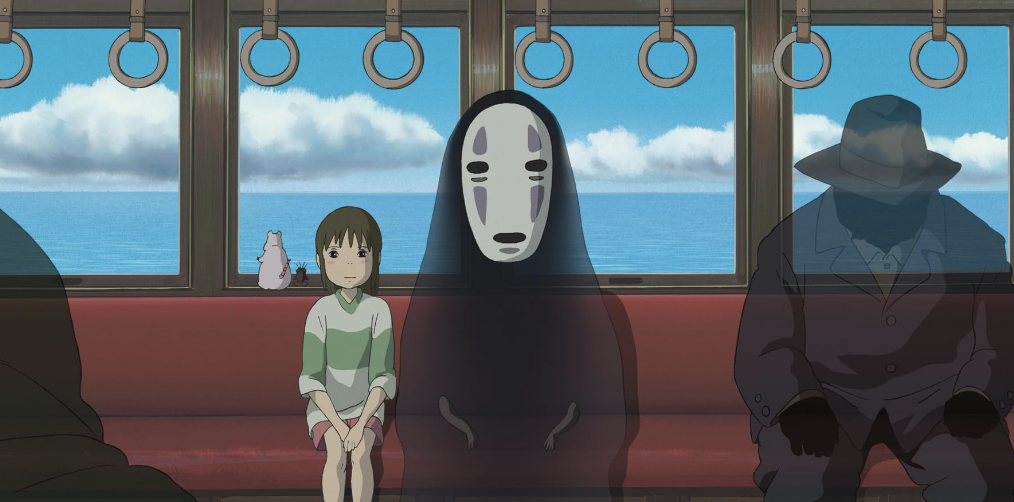

Before the internet, the only way for an American teen to watch anime was illicitly, on bootlegged video cassettes that were passed fan to fan like some visual drug. Each new discovery felt like a tiny revelation, a step into an undiscovered country. The distant realm of Japan was a place I only knew about from my grandfather’s war tales and dark stories on the evening news.

The Eighties was an era of trade frictions between Japan and the West, of bellicose political rhetoric and literal Japan-bashing: American lawmakers gleefully took sledgehammers to Japanese electronics on the steps of Congress. But my friends and I were not deterred, enchanted as we were by animated fantasies so strikingly different from anything in our own culture. And even then, we could tell that there was something different about a certain anime auteur. His name was Hayao Miyazaki.

It is the rare creator who possesses the knack of appealing simultaneously to casual moviegoers, die-hard genre fans and tastemakers alike. Miyazaki’s latest film, The Boy and the Heron, set records when it was released in Japan this year; this weekend, it made history as the first original anime movie to reach number 1 in both American and Canadian cinemas. Influential international devotees include Wes Anderson and Guillermo del Toro, who took David Cronenberg to a screening of Miyazaki’s beloved ode to childhood, My Neighbor Totoro. And last year, a stage adaptation of Totoro premiered at the Barbican to so much acclaim that it has been revived already.

Read the rest of this article at: Unherd

My friend Hannah, a single mother of two boys, ages 6 and 9, remembers the first time a teacher brought up her older son’s behavior at school. She had left New York City in the midst of his public-school kindergarten year to temporarily hunker down near family during the pandemic. A few months later, she enrolled him in an in-person private school near where they’d relocated. At an introductory playdate there, a teacher, who had been at the school for 40 years, “very nicely said to me, ‘This is a young gentleman who needs to learn some boundaries.’” Now settled back in Manhattan, Hannah recently received a similar note about her younger son, who is in kindergarten. “I met with the school psychologist because I had noticed a lot of disrespect at home,” she said. The psychologist said the same thing as the small-town kindergarten teacher: The kid needed boundaries.

Hannah readily admits that she might be a tad permissive as a parent. She has a hard time holding the line with screen time on school nights and gives in to stop the mind-numbing negotiating her kids have perfected. On a recent visit to a friend’s house, where her kids didn’t want to eat the breakfast that was offered, Hannah marveled as the dad of the house told them there were no other options and they could take it or leave it. “I thought, We can do that?” Hannah laughed. “I am making toast and sausage for one kid, eggs for another, running around.”

She doesn’t name her philosophy as “gentle parenting” or “respectful parenting” or “intentional parenting” — though she’s familiar with these buzzwords. They cloud the air that modern parents, myself included, breathe. Instead, she says she is simply trying to be less punitive than her own parents, to break up the patterns (namely, screaming and spanking) that she and many of her fellow Gen-X parents experienced growing up. According to a new, still unpublished study, this is exactly how parents of young children who are attempting to practice gentle parenting see the movement. Researchers Alice Davidson, Ph.D., a professor of developmental psychology at Rollins College in Winter Park, Florida, and Annie Pezalla, Ph.D., an assistant professor of psychology at Macalester College in St. Paul, Minnesota, asked 100 parents to choose adjectives that describe their own parenting style versus their parents’. “Those who identified as ‘gentle parenting’ parents used more terms to describe their approach: gentle, affectionate, conscious, intentional. All the words are synonymous,” says Davidson. “Whereas they use fewer, more simplistic words to describe their parents,” she says — words like reactive or confrontational. “Even for respondents whose parents showed a lot of love and warmth, they want to do that and then some,” says Davidson.

Read the rest of this article at: The Cut

An academic’s life is none too cinematic. Those employed in the fields of law, medicine, or finance may grumble at onscreen depictions of their trades—while, I imagine, privately preening at the attention—but their jobs are at least worth dramatizing. Who can find narrative momentum in the minutiae of peer review?

One way to make academics seem interesting is to thrust them from the ivory tower and into the world, as seen in films from the European art house to Hollywood. In Ingmar Bergman’s “Wild Strawberries,” a professor of medicine (Victor Sjöström) steps out of his office and into a series of surreal encounters with memory and death. In “Arrival,” the linguist Louise Banks (Amy Adams) has only begun her lecture before class is dismissed on account of extraterrestrial activity. Various onscreen “ologists” have their fun out there in the field: the paleontologist couple from “Jurassic Park”; the Harvard professor of religious symbology from “The Da Vinci Code”; and the blockbuster archeologist Indiana Jones, who began his movie run as a drool-worthy vision in tweed. The latest film in the franchise, released earlier this year, finds the old prof heading into retirement, his most unorthodox maneuver yet.

If a movie professor remains confined to campus grounds, he (it’s almost always a “he,” isn’t it?) must have a reason for doing so, something freakish: genius, say, or madness, or some combination of the two (see: “A Beautiful Mind”). Even then, campus is often a mere waystation. I might be the only one who preferred the first forty-five minutes of Christopher Nolan’s “Oppenheimer” over the latter two and a quarter hours, before the titular physicist is whisked away from academia to turn his ideas into a weapon of mass destruction. Nolan stokes the fantasy of a self-consciously academic environment with all the necessary tableaux—oaken surfaces, chalk dust, the Socratic method—illustrating J. Robert Oppenheimer’s faith in campus life as the center of the universe. (In my experience, virtually every professor on Earth labors under a similar illusion.) During his graduate studies in Europe, young Oppenheimer (Cillian Murphy) is absorbed in the breaking thought of his time, not only in physics but also in literature and the arts. In a montage, we see Oppie in the classroom; Oppie at a museum, in a face-off with Picasso’s “Woman Sitting with Crossed Arms”; Oppie at a chalkboard, at his desk, or turned away from it, smashing wine glasses against parquet floors and observing the scattered aftermath. This is academia as romance, independent study as origin story, and I, for one, was entertained. “Algebra’s like sheet music,” the elder physicist Niels Bohr tells him gravely, adding, “Can you hear the music, Robert?” Cue the strings. Later, as a professor at Berkeley, Oppenheimer works down the hall from Dr. Ernest Lawrence (Josh Hartnett), who, in high-waisted trousers and a variety of vests, gives Indiana Jones a run for his money. Oppenheimer’s classroom is initially near barren, which is to be expected when you’re “teaching something no one here has ever dreamt of.” Then, in a series of shots, we see a crowd gradually form—a star professor is born. (Ominously, the professor is preoccupied with what might happen when stars die.) As “Oppenheimer” tips into the forty-sixth minute, though, our hero takes his leave from the realm of theory and devotes himself to the admittedly far more spectacular imperatives of war.

Read the rest of this article at: The New Yorker