At around 9 A.M. every weekday, a crow caws in the Jardin des Plantes, the oldest botanical garden in Paris. The sound is a warning to every other crow: Frédéric Jiguet, a tall ornithologist whose dark hair is graying around the ears, has shown up for work. As Jiguet walks to his office at the French National Museum of Natural History, which is on the garden’s grounds, dozens of the black vandals take to the trees and rain abuse on him, as though he were a condemned man. “I think I’m the best friend of French crows,” Jiguet told me. “But I am probably the man they hate most.”

Crows are famous for holding grudges. Their beef with Jiguet started in 2015, when the Paris government hired him to study their movements around the city. Farmers blame crows for crop damage, and hunters shoot hundreds of thousands of the birds each year; in Paris, some district managers wanted permission to cull them for tearing into trash bags and digging up lawns. But Jiguet questioned the wisdom of killing so many crows. “It costs a lot to destroy pests,” he remembers thinking. “Can it really be efficient to destroy all these lives?”

Outside his office, Jiguet began baiting net traps with kitchen scraps such as raw eggs and bits of chicken. He removed one bird at a time with his bare hands. Then he stuffed the bird into a cloth tube that he had cut from his daughter’s leggings, to immobilize the bird while he recorded its weight on a scale. Finally, he strapped a colorful ring, which was labelled with a three-digit number, to the bird’s leg.

Eventually, Jiguet erected a metal cage the size of a wood shed in the garden, to trap the birds. Crows could fly in, but they couldn’t escape until Jiguet let them out. Although some of the birds meekly accepted their fates, others pecked at him furiously when he ringed their legs.

Over the years, Jiguet has caught and released more than thirteen hundred crows. He also built a Web site where people could report sightings. These efforts revealed a big shakeup every spring, in which year-old crows flew the coop and looked for new habitats. Some Parisian crows were spotted as far away as the Dutch countryside, but most formed new flocks in the city’s green spaces, where there was garbage to eat. Jiguet convinced the city government that there was no point in trying to kill a park’s crows: after all, new ones would arrive within a year. “This project definitely changed the view of Parisian politicians on crows,” he said. Recently, he published a book about coexisting with crows.

Read the rest of this article at: The New Yorker

When scientists first created the class of drugs that includes Ozempic, they told a tidy story about how the medications would work: The gut releases a hormone called GLP-1 that signals you’re full, so a drug that mimics GLP-1 could do the exact same thing, helping people eat less and lose weight.

The rest, as they say, is history. The GLP-1 revolution birthed semaglutide, which became Ozempic and Wegovy, and tirzepatide, which became Mounjaro and Zepbound—blockbuster drugs that are rapidly changing the face of obesity medicine. The drugs work as intended: as powerful modulators of appetite. But at the same time that they have become massive successes, the original science that underpinned their development has fallen apart. The fact that they worked was “serendipity,” Randy Seeley, an obesity researcher at the University of Michigan, told me. (Seeley has also consulted for and received research funding from companies that make GLP-1 drugs.)

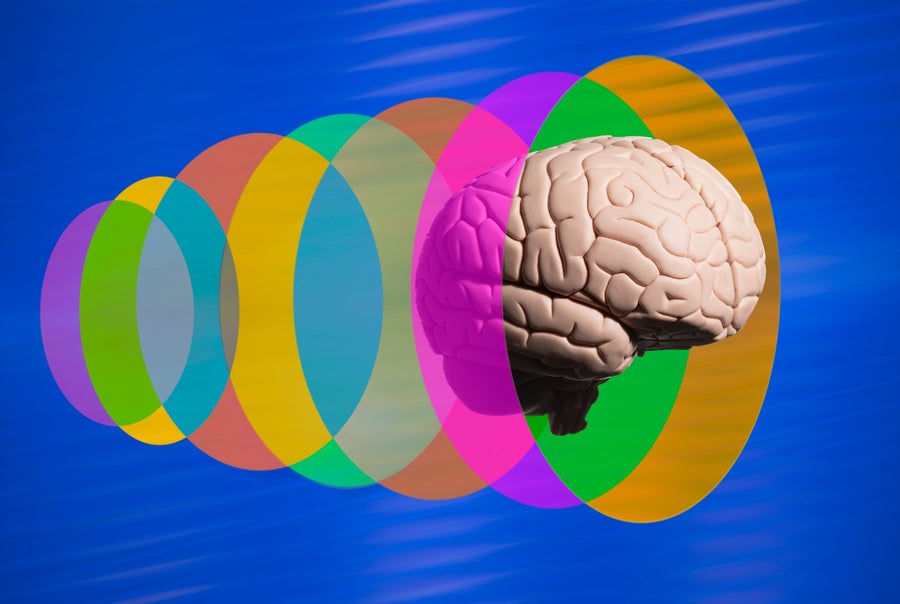

Now scientists are beginning to understand why. In recent years, studies have shown that GLP-1 from the gut breaks down quickly and has little effect on our appetites. But the hormone and its receptors are naturally present in many parts of the brain too. These brain receptors are likely the reason the GLP-1 drugs can curb the desire to eat and, anecdotally, other desires as well. The weight-loss drugs are ultimately drugs for the brain.

Obesity medications differ in a key way from the natural molecule they’re meant to mimic: They last a lot longer. GLP-1 released in the gut has a half-life of just minutes in the bloodstream, whereas semaglutide and tirzepatide have half-lives measured in days. This is by design. Both drugs were specifically engineered to resist degradation, so that they need to be injected only once a week. (The very first GLP-1 drug on the market, exenatide, had to be injected twice a day when it was released, in 2005—the field has come a long way.) The medications are also given at levels much higher than natural GLP-1 ever reaches in the bloodstream; Seeley tends to put it at five times as high, but he said even that may be a gross underestimate.

By indiscriminately flooding the body with long-lasting molecules, the injections likely allow engineered GLP-1 drugs to penetrate parts of the body that the natural gut hormone cannot—namely, deep in the brain. First-generation GLP-1 drugs including exenatide, which are far less powerful than the current crop, have been shown to cross the blood-brain barrier and tickle areas important for appetite and nausea. Exactly what Ozempic and its successors do is still less clear, but they are so effective that many scientists think they must be reaching far, directly or indirectly.

All of this matters because the brain, as we now know, has its own GLP-1 system, parallel to and largely separate from whatever is going on in the gut. Neurons in the hindbrain, sitting at the base of the skull, secrete their own GLP-1, while receptors listening to them are found throughout the brain. In animal experiments, hitting those receptors indeed suppresses appetite.

Read the rest of this article at: The Atlantic

I arrived at M.I.T. in the fall of 2004. I had just turned twenty-two, and was there to pursue a doctorate as part of something called the Theory of Computation group—a band of computer scientists who spent more time writing equations than code. We were housed in the brand-new Stata Center, a Frank Gehry-designed fever dream of haphazard angles and buff-shined metal, built at a cost of three hundred million dollars. On the sixth floor, I shared an office with two other students, one of many arranged around a common space partitioned by a maze of free-standing, two-sided whiteboards. These boards were the group’s most prized resource, serving for us as a telescope might for an astronomer. Professors and students would gather around them, passing markers back and forth, punctuating periods of frantic writing with unsettling quiet staring. It was common practice to scrawl “DO NOT ERASE” next to important fragments of a proof, but I never saw the cleaning staff touch any of the boards; perhaps they could sense our anxiety.

Even more striking than the space were the people. During orientation I met a fellow incoming doctoral student who was seventeen. He had graduated summa cum laude at fifteen and then spent the intervening period as a software engineer for Microsoft before getting bored and deciding that a Ph.D. might be fun. He was the second most precocious person I met in those first days. Across from my office, on the other side of the whiteboard maze, sat a twenty-three-year-old professor named Erik Demaine, who had recently won a MacArthur “genius” grant for resolving a long-standing conjecture in computational geometry. At various points during my time in the group, the same row of offices that included Erik was also home to three different winners of the Turing Award, commonly understood to be the computer-science equivalent of the Nobel Prize. All of this is to say that, soon after my arrival, my distinct impression of M.I.T. was that it was preposterous—more like something a screenwriter would conjure than a place that actually existed.

Read the rest of this article at: The New Yorker

Mayor John Lindsay declared war on New York’s graffiti in 1972. It was a curious move, even in an era known for unwinnable conflicts. Many residents hated graffiti, of course, but it didn’t lack for fans in high places. The previous year, the Times had published an admiring profile of Taki, a teen-ager who scribbled his tag, TAKI 183, on walls and subway cars across the five boroughs. In 1974, Norman Mailer wrote a long essay for Esquire in which he compared Taki et al. to van Gogh. But the Mayor had spoken, and for the rest of the seventies the M.T.A. spent millions of dollars keeping the trains gray, which mainly seemed to encourage people to gussy them up again. By 1982, the year of Keith Haring’s career-making solo show at the Tony Shafrazi Gallery, there was nothing groundbreaking about the idea that graffiti could be real art.

What was still novel was the idea that graffiti could sell for real money. At the end of the summer, the stock market began its famed five-year sprint, yanking the art market behind it. A newly loaded clientele went south of Fourteenth Street, where the principal crops included cocaine, rancid apartments, and most of the worthwhile culture within the city limits. In a single year, more than twenty galleries sprouted in the East Village alone. There were already spaces where graffiti artists could display their work—Fashion Moda, in the South Bronx, was the best known—but not for these prices. Between 1980 and 1982, Haring filled subway stations with hundreds of chalk drawings of babies, U.F.O.s, dogs, and televisions; for his solo show, he covered Shafrazi’s walls in the same sorts of images. Within a few days of the opening, he had sold around a quarter of a million dollars’ worth of work. If you bought something, you were buying graffiti, but a special kind that you could hang in your home, regardless of whether you cared to see it on your block.

A handball court in Harlem; a candy store on Avenue D; the Fiorucci boutique by the Piazza del Duomo, in Milan; the Dupleix Métro station, in Paris; the Berlin Wall; Grace Jones—for much of the eighties, it seemed that Haring’s mission was to coat every square inch of the planet in his pictures, and that he might someday succeed. There were arrests and court summonses along the way, but they got rarer as he got more famous. (In 1984, another New York mayor, Ed Koch, thanked him for his public service.) A “CBS Evening News” segment on Haring, which aired shortly after the Shafrazi opening and was seen by some fourteen million people, presents his subway art as the creations of a precocious kid. He looks the part—twiggy frame, wire-rimmed glasses—and sounds it, too, explaining his pictures with the shy earnestness of someone a few years away from discovering self-doubt. “They come out fast, but, I mean, it’s a fast world,” he says. His voice is so flat that he could be doing a bit.

The only bit, the segment reveals, is that there is none. Haring does work fast, sometimes finishing dozens of drawings in a day, always of things everybody knows. He attracts onlookers but barely acknowledges them—as long as he’s working, he barely hesitates at all. Muscle memory is his muse. In the years leading up to his death, from complications due to AIDS, at the age of thirty-one, the crowds kept getting bigger, but his process stayed the same: steady hand, monastic concentration. To go on YouTube and watch Haring perform is weirdly gripping, so much so that the residue of performance he left behind, otherwise known as images, can’t help but let you down.

Read the rest of this article at: The New Yorker

When a young woman earning her Ph.D. in biostatistics came to see psychiatrist Michael Gandal with symptoms of psychosis, she became the fifth person in her immediate family to be diagnosed with a neurodevelopmental or psychiatric condition—in her case, schizophrenia. One of her brothers is autistic and another has attention deficit hyperactivity disorder (ADHD) and Tourette syndrome. Their mother has anxiety and depression and their father has depression.

Gandal has seen this pattern before. “If one person, say, has a diagnosis of schizophrenia in their family, not only are other people in the extended family more likely to have a diagnosis of schizophrenia but they are also more likely to have a diagnosis of bipolar disorder, autism or major depression,” Gandal says. This propensity runs in families.

The latest edition of the Diagnostic and Statistical Manual of Mental Disorders (DSM), the mental health field’s diagnostic standard, describes nearly 300 distinct mental disorders, each with its own characteristic symptoms. Yet increasing evidence suggests the lines between them are blurry at best. Individuals with mental disorders often have symptoms of many different conditions, either simultaneously or at different times in their lives. What’s more, as the family patterns suggest, the genes linked with these conditions overlap. “Everything is genetically correlated,” says Robert Plomin, a behavioral geneticist at King’s College London. “The same genes are affecting a lot of different disorders.”

In fact, it’s difficult to find specific causes of any sort, be they genetic or environmental, for individual psychiatric disorders, says Duke University psychologist Avshalom Caspi. The same is not true of most neurological disorders, such as epilepsy or multiple sclerosis. Those conditions seem to have a distinct genetic and biological origin, in contrast to the “deeply interconnected nature for psychiatric disorders,” according to findings published in 2018.

Put another way, scientists say there exists a propensity to develop any of a range of psychiatric problems. They call this predisposition the general psychopathology factor, or p factor. This shared tendency is not a minor contributor to the extent to which someone develops symptoms of mental illness. In fact, it explains about 40 percent of the risk.

The concept is akin to general cognitive ability, or g, which predicts scores on tests of skills such as spatial ability or verbal fluency. And it suggests that what joins mental health conditions is at least as important as what divides them. The concept of p, Caspi says, “is almost a clarion call for focusing on what is common rather than being preoccupied with what is distinct.”

A few researchers are calling for the erasure of hard boundaries between psychiatric conditions, which could have dramatic consequences for both the diagnosis and treatment of these disorders. “I think this will be the end of diagnostic classification schemes,” Plomin says.

Although that is not likely to happen soon, given that doctors and insurers rely on the DSM’s diagnostic codes, researchers have proposed alternative schemes that are more in line with the concept of p. At the very least, some experts say, mental health research, including clinical trials of treatments, should break free of its DSM silos and encompass multiple diagnoses. “We should be looking at psychopathology without the blinkers of the DSM,” says Patrick McGorry, a psychiatrist and professor of youth mental health at the University of Melbourne in Australia.

Read the rest of this article at: Scientific American