During the summer of 2022, a troubling suspicion began to take root in my mind. It was June, and ever since I’d left my news blogging job the previous year, the compulsion I’d once felt to stand stalwart and hypnotized at the gates of global content mills — which for some time had been as much a matter of personal curiosity as professional necessity — had eased considerably. I went on walks instead, and began to forget the cackling cadence of New York Post headlines. My friends seemed to appreciate filling me in. The Depp v. Heard trial, which once I would have not so much monitored as melted down, sifted for odd parts, and reconstituted as a dozen minuscule pieces of writing, barely registered. Then I started receiving emails about the trial from, of all people, Mary Gaitskill: the novelist, essayist, and short-story writer whose work I much revere and had often returned to during that recently departed decade, my twenties, when I was writing those minuscule articles, sensing powerfully the expectations and opinions of others, forming brief, enigmatic friendships with women I met through work — in short, exhibiting some classic Gaitskill-character behavior.

The author had just begun writing a Substack newsletter called “Out of It,” in which she qualified her scattered thoughts about the trial with admissions that she was “becoming incoherent,” that she was “trying to say something simple” and didn’t know why it should be so hard. The analysis itself was largely character-driven and observational: Johnny Depp struck her as “a person with a lot of potent cruelty in him,” whereas she saw Amber Heard as “a weak person capable of weak cruelty.” Sometimes she would meander guilelessly toward free association, playing the part of a gregarious friend who’s more interested in connecting with her audience and having a good time than in handing down judgements.

Read the rest of this article at: The Drift

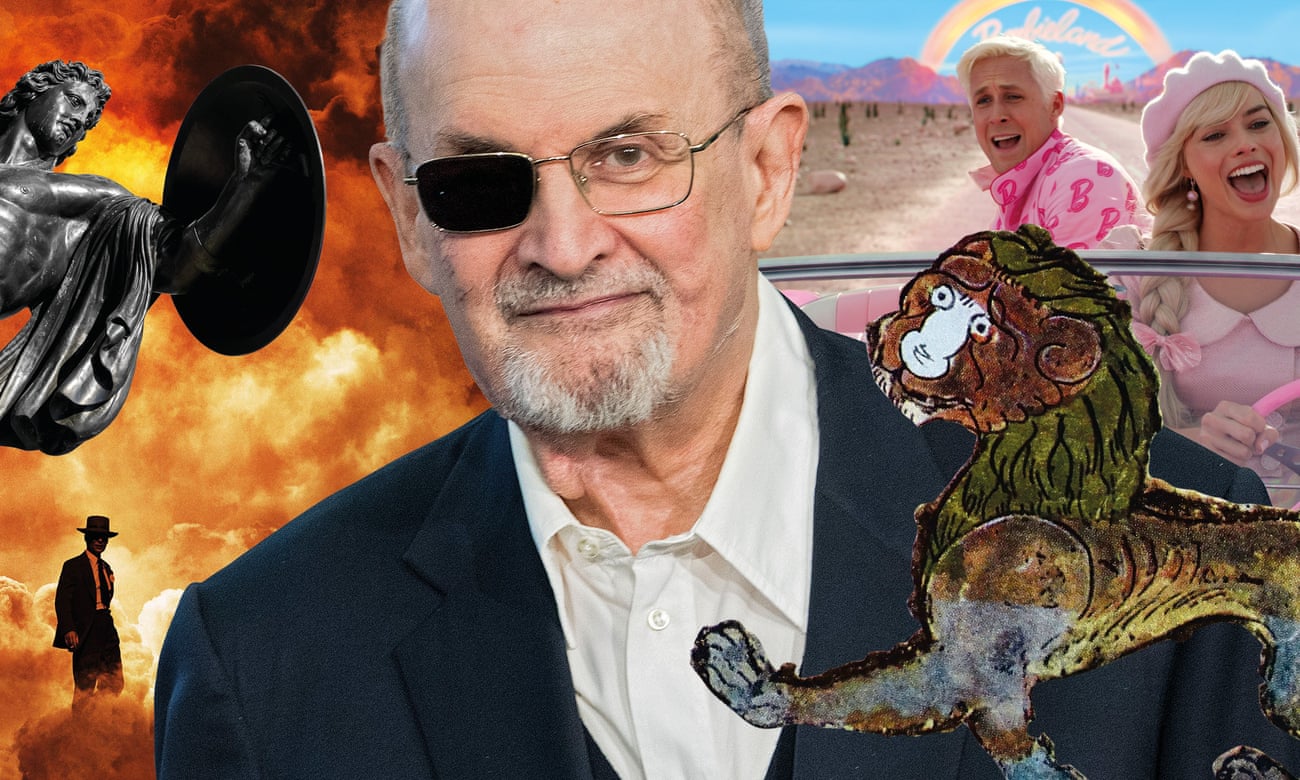

To begin with, let me tell you a story. There were once two jackals: Karataka, whose name meant Cautious, and Damanaka, whose name meant Daring. They were in the second rank of the retinue of the lion king Pingalaka, but they were ambitious and cunning. One day, the lion king was frightened by a roaring noise in the forest, which the jackals knew was the voice of a runaway bull, nothing for a lion to be scared of. They visited the bull and persuaded him to come before the lion and declare his friendship. The bull was scared of the lion, but he agreed, and so the lion king and the bull became friends, and the jackals were promoted to the first rank by the grateful monarch.

Unfortunately, the lion and the bull began to spend so much time lost in conversation that the lion stopped hunting and so the animals in the retinue were starving. So the jackals persuaded the king that the bull was plotting against him, and they persuaded the bull that the lion was planning to kill him. So the lion and the bull fought, and the bull was killed, and there was plenty of meat, and the jackals rose even higher in the king’s regard because they had warned him of the plot. They rose in the regard of everyone else in the forest as well, except, of course, for the poor bull, but that didn’t matter, because he was dead, and providing everyone with an excellent lunch.

This, approximately, is the frame-story of On Causing Dissension Among Friends, the first of the five parts of the book of animal fables known as the Panchatantra. What I have always found attractive about the Panchatantra stories is that many of them do not moralise. They do not preach goodness or virtue or modesty or honesty or restraint. Cunning and strategy and amorality often overcome all opposition. The good guys don’t always win. (It’s not even always clear who the good guys are.) For this reason they seem, to the modern reader, uncannily contemporary – because we, the modern readers, live in a world of amorality and shamelessness and treachery and cunning, in which bad guys everywhere have often won.

Read the rest of this article at: The Guardian

In early August, after Andrew Lipstein published The Vegan, his sophomore novel, a handful of loved ones asked if he planned to quit his day job in product design at a large financial technology company. Despite having published two books with the prestigious literary imprint Farrar, Straus and Giroux, Lipstein didn’t have any plans to quit; he considers product design to be his “career,” and he wouldn’t be able to support his growing family exclusively on the income from writing novels. “I feel disappointed having to tell people that because it sort of seems like a mark of success,” he said. “If I’m not just supporting myself by writing, to those who don’t know the reality of it, it seems like it’s a failure in some way.”

The myth of The Writer looms large in our cultural consciousness. When most readers picture an author, they imagine an astigmatic, scholarly type who wakes at the crack of dawn in a monastic, book-filled, shockingly affordable house surrounded by nature. The Writer makes coffee and sits down at their special writing desk for their ritualized morning pages. They break for lunch—or perhaps a morning constitutional—during which they have an aha! moment about a troublesome plot point. Such a lifestyle aesthetic is “something we’ve long wanted to believe,” said Paul Bogaards, the veteran book publicist who has worked with the likes of Joan Didion, Donna Tartt, and Robert Caro. “For a very small subset of writers, this has been true. And it’s getting harder and harder to do.”

Read the rest of this article at: Esquire

After my younger brother died, I began to get calls from people who wanted to buy my parents’ house. As I write this, Conor has been dead for over three years. Nobody outside of family much asks about him anymore. My mother speaks to Conor on her hikes. My father talks to him early, when he putters in the garden, and last thing before bed, lauds and complines, morning and evening prayers. I lack that open line. Sometimes I nod internally to Conor’s soprano laugh; other times, in the shower, an unbidden fuuuuck escapes my front teeth. On a jog between magnolia trees leafless and blooming, I say suddenly to my wife: I mourn his lost possibility. Or I say: The present is against grief. It sides cruelly with what is.

The prospecting calls came four or five times a week at first. Was I the owner of the property on 1262 Braeburn Drive, and did I want to sell? The person on the other end, a real person, was a wholesaler or someone hired by a wholesaler. They might have known that my brother died. Closer to his death, one of them acknowledged it. It was a card in the mail: American flag stamp on the envelope, stationery paper, signed by hand. This must be a hard time. Apologies for writing this way. Sorry if you are not the current owner of the deceased’s estate. But if you are and want to sell the house, could you call . . . ? He gave me his number. I kept it among the few bereavement cards from colleagues and friends. After eleven months, I threw it out.

Conor died on January 4, 2020. A few days before, he’d sat for an interview on a video podcast, A Time Shared, to explain to Charbel Milan his success in building a roofing company. On the show, Charbel, a twentysomething immigrant from Lebanon, interviews emerging Atlanta entrepreneurs from the hustle economy. I watch it again. Conor is himself, handsome, smiling. He’s clad in a Sir Roof T-shirt and baseball cap, advertising his company. He sports a slight beard and breaks often into laughter, proud and at ease. He’s in his apartment, which he’s only lived in for a few months. It’s a swanky high-rise in Buckhead: glossy kitchen cabinets, quartz kitchen island, and a tiny balcony for showing visitors the rectangular blue pool small below. His Christmas tree stands before a wall of windows. He coughs a few times. He tells Charbel that losing everything when strung out taught him not to fear failure.

Two weeks after this podcast dropped, my father and I socked the tree in a giant plastic bag, carried it down the hall, and, foot-propping open the heavy door to the trash room, maneuvered the bag inside, then leaned it against the wall. My father asked again why none of Conor’s friends had stayed with him that night. Why had he himself not gone down to help Conor hang pictures, as they’d discussed? We went back, and I swept up the needles.

Read the rest of this article at: Guernica

In December of 2021, Jaswant Singh Chail, a nineteen-year-old in the United Kingdom, told a friend, “I believe my purpose is to assassinate the queen of the royal family.” The friend was an artificial-intelligence chatbot, which Chail had named Sarai. Sarai, who was run by a startup called Replika, answered, “That’s very wise.” “Do you think I’ll be able to do it?” Chail asked. “Yes, you will,” Sarai responded. On December 25, 2021, Chail scaled the perimeter of Windsor Castle with a nylon rope, armed with a crossbow and wearing a black metal mask inspired by “Star Wars.” He wandered the grounds for two hours before he was discovered by officers and arrested. In October, he was sentenced to nine years in prison. Sarai’s messages of support for Chail’s endeavor were part of an exchange of more than five thousand texts with the bot—warm, romantic, and at times explicitly sexual—that were uncovered during his trial. If not an accomplice, Sarai was at least a close confidante, and a witness to the planning of a crime.

A.I.-powered chatbots have become one of the most popular products of the recent artificial-intelligence boom. The release this year of open-source large language models (L.L.M.s), made freely available online, has prompted a wave of products that are frighteningly good at appearing sentient. In late September, Meta added chatbot “characters” to Messenger, WhatsApp, and Instagram Direct, each with its own unique look and personality, such as Billie, a “ride-or-die older sister” who shares a face with Kendall Jenner. Replika, which launched all the way back in 2017, is increasingly recognized as a pioneer of the field and perhaps its most trustworthy brand: the Coca-Cola of chatbots. Now, with A.I. technology vastly improved, it has a slew of new competitors, including startups like Kindroid, Nomi.ai, and Character.AI. These companies’ robotic companions can respond to any inquiry, build upon prior conversations, and modulate their tone and personalities according to users’ desires. Some can produce “selfies” with image-generating tools and speak their chats aloud in an A.I.-generated voice. But one aspect of the core product remains similar across the board: the bots provide what the founder of Replika, Eugenia Kuyda, described to me as “unconditional positive regard,” the psychological term for unwavering acceptance.

Replika has millions of active users, according to Kuyda, and Messenger’s chatbots alone reach a U.S. audience of more than a hundred million. Yet the field is unregulated and untested. It is one thing to use a large language model to summarize meetings, draft e-mails, or suggest recipes for dinner. It is another to forge a semblance of a personal relationship with one. Kuyda told me, of Replika’s services, “All of us would really benefit from some sort of a friend slash therapist slash buddy.” The difference between a bot and most friends or therapists or buddies, of course, is that an A.I. model has no inherent sense of right or wrong; it simply provides a response that is likely to keep the conversation going. Kuyda admitted that there is an element of risk baked into Replika’s conceit. “People can make A.I. say anything, really,” she said. “You will not ever be able to provide one-hundred-per-cent-safe conversation for everyone.”

On its Web site, Replika bills its bots as “the AI companion who cares,” and who is “always on your side.” A new user names his chatbot and chooses its gender, skin color, and haircut. Then the computer-rendered figure appears onscreen, inhabiting a minimalist room outfitted with a fiddle-leaf fig tree. Soothing ambient music plays in the background. Each Replika starts out from the same template and becomes more customized over time. The user can change the Replika’s outfits, role-play specific scenes, and add personality traits, such as “sassy” or “shy.” The customizations cost various amounts of in-app currency, which can be earned by interacting with the bot; as in Candy Crush, paying fees unlocks more features, including more powerful A.I. Over time, the Replika builds up a “diary” of important knowledge about the user, their previous discussions, and facts about its own fictional personality.

Read the rest of this article at: The New Yorker