Amazon is getting worse, but you probably already knew that, because you probably shop at Amazon. The online retail behemoth’s search results are full of ads and sponsored results that can push actually relevant, well-reviewed options far down the page. The proportion of its inventory that comes from brands with names like Fkprorjv and BIDLOTCUE seems to be constantly expanding. Many simple queries yield results that appear to be the exact same product over and over again—sometimes with the exact same photos—but all with different names, sellers, prices, ratings, and customer reviews. If you squint, you can distinguish between some of the products, which feels like playing a decidedly less whimsical version of “spot the difference” picture games.

Last week, the journalist John Herrman published a theory on why, exactly, Amazon seems so uninterested in the faltering quality of its shopping experience: The company would rather leave the complicated, labor-intensive business of selling things to people to someone else. To do that, it has opened its doors to roughly 2 million third-party sellers, whether they are foreign manufacturers looking for more direct access to customers or the disciples of “grindset” influencers who want to use SEO hacks to fund the purchase of rental properties. In the process, Amazon has cultivated a decentralized, disorienting mess with little in the way of discernible quality control or organization. According to Herrman, that’s mainly because Amazon’s primary goal is selling the infrastructure of online shopping to other businesses—things like checkout, payment processing, and order fulfillment, which even large retailers can struggle to handle efficiently. Why be Amazon when you can instead make everyone else be Amazon and take a cut?

Read the rest of this article at: The Atlantic

In 2013, workers at a German construction company noticed something odd about their Xerox photocopier: when they made a copy of the floor plan of a house, the copy differed from the original in a subtle but significant way. In the original floor plan, each of the house’s three rooms was accompanied by a rectangle specifying its area: the rooms were 14.13, 21.11, and 17.42 square metres, respectively. However, in the photocopy, all three rooms were labelled as being 14.13 square metres in size. The company contacted the computer scientist David Kriesel to investigate this seemingly inconceivable result. They needed a computer scientist because a modern Xerox photocopier doesn’t use the physical xerographic process popularized in the nineteen-sixties. Instead, it scans the document digitally, and then prints the resulting image file. Combine that with the fact that virtually every digital image file is compressed to save space, and a solution to the mystery begins to suggest itself.

Compressing a file requires two steps: first, the encoding, during which the file is converted into a more compact format, and then the decoding, whereby the process is reversed. If the restored file is identical to the original, then the compression process is described as lossless: no information has been discarded. By contrast, if the restored file is only an approximation of the original, the compression is described as lossy: some information has been discarded and is now unrecoverable. Lossless compression is what’s typically used for text files and computer programs, because those are domains in which even a single incorrect character has the potential to be disastrous. Lossy compression is often used for photos, audio, and video in situations in which absolute accuracy isn’t essential. Most of the time, we don’t notice if a picture, song, or movie isn’t perfectly reproduced. The loss in fidelity becomes more perceptible only as files are squeezed very tightly. In those cases, we notice what are known as compression artifacts: the fuzziness of the smallest JPEG and MPEG images, or the tinny sound of low-bit-rate MP3s.
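The distinction between lossless and lossy compression can be made concrete with a small sketch. The lossless half uses Python's standard `zlib` module. The lossy half is a toy quantizer invented purely for illustration (the names `lossy_encode`, `lossy_decode`, and the `step` parameter are assumptions, not anything described in the article), and it is far simpler than what JPEG or MP3 actually do.

```python
import zlib

# Lossless: encode, then decode, and the bytes come back identical.
original = b"Room areas: 14.13, 21.11, 17.42 square metres. " * 20
encoded = zlib.compress(original)
restored = zlib.decompress(encoded)
lossless_ok = restored == original        # no information discarded
smaller = len(encoded) < len(original)    # repetitive input compresses well


# Lossy (hypothetical toy scheme): keep only the coarse bucket of each sample.
def lossy_encode(samples, step=16):
    """Map each value to the index of its bucket; detail within a bucket is dropped."""
    return [s // step for s in samples]


def lossy_decode(codes, step=16):
    """Rebuild an approximation by using the midpoint of each bucket."""
    return [c * step + step // 2 for c in codes]


samples = [0, 7, 100, 130, 255]           # e.g. pixel brightness values
approx = lossy_decode(lossy_encode(samples))
max_error = max(abs(a - b) for a, b in zip(samples, approx))

print("lossless round trip identical:", lossless_ok)
print("lossy round trip:", samples, "->", approx, "max error", max_error)
```

In the toy scheme, a larger `step` means fewer distinct codes to store and a coarser approximation, which loosely mirrors the way artifacts become more visible as a file is squeezed more tightly.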

Read the rest of this article at: The New Yorker

In 2012, when Steven Soderbergh and Channing Tatum released “Magic Mike,” a moist, underlit caper about male entertainers at a Tampa strip club, they thought they were making an indie. Instead, the film grossed a hundred and sixty-seven million dollars, spawning an international franchise. Starring Tatum as a dancer who dreams of opening a furniture business, the film also laid out a surprisingly wholesome theory of the art of male striptease. Mike’s liberatory gyrations, which spread delight and empowerment wherever he slithers, became the basis for a sequel, “Magic Mike XXL”; a live stage show with runs in Las Vegas, Miami, London, and Berlin; and an unscripted HBO series, “Finding Magic Mike.” The final installment of the film trilogy, “Magic Mike’s Last Dance,” opens Friday.

“Magic Mike” is about capitalism. A thong is perhaps the least undignified costume that Tatum’s character, a six-year veteran of the Xquisite Male Dance Revue, dons to make ends meet: when he’s not at the club, he straps on a tool belt to lay roofing tile in dangerous heat, perches glasses on his nose to angle for a small-business loan, and keeps his dashboard dressed in protective plastic so that his car can be resold. His is a wincing, genuine precarity—the guy has a heart of gold, but calls sociology “social studies.” In the club, though, he’s king. The first film portrays the world of male stripping with a buoyant intimacy, through the lens of a dancer’s memory. (Tatum worked as a stripper when he was eighteen, and his experience inspired the script.) The men bitch about who choreographs their solos and hunch over sewing machines, repairing torn costumes. Mike would like to do something more worthwhile and lucrative, but he can’t. Although you cheer when he quits the show at the end of the first film, and at the end of the second, you don’t really blame him for coming back. Dancing does something to him that is potent and undeniable. The economics of the work are degrading, but the work isn’t: he goes onstage tense and comes off relaxed, covered in sweat.

Read the rest of this article at: The New Yorker

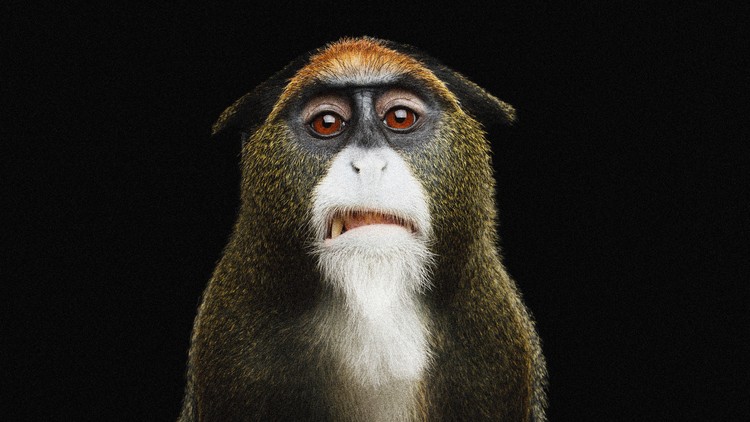

Eleven years ago, on the remote Japanese island of Kojima, a female macaque walked backwards into a stray heap of primate poop, glanced down at her foot, and completely flipped her lid. The monkey hightailed it down the shoreline on three feet, kicking up sand as she sprinted, until she reached a dead tree, where “she repeatedly rubbed her foot and smelled it until all of the sticky matter disappeared,” says Cécile Sarabian, a cognitive ecologist at the University of Hong Kong, who watched the incident unfold. Sarabian, then a graduate student studying parasite transmission among primates, was entranced by the familiarity of it all: the dismay, the revulsion, the frenetic desire for clean. It’s exactly what she or any other human might have done, had they accidentally stepped in it.

In the years following the event, Sarabian came to recognize the macaque’s panicked reaction as a form of disgust—just not the sort that many people first think of when the term comes to mind. Disgust has for decades been billed as a self-awareness of one’s own aversions, a primal emotion that’s so exclusive to people that, as some have argued, it may help define humanity itself. But many scientists, Sarabian among them, subscribe to a broader definition of disgust: the suite of behaviors that help creatures of all sorts avoid pathogens; parasites; and the flora, fauna, and substances that ferry them about. This flavor of revulsion—centered on observable actions, instead of conscious thought—is likely ancient and ubiquitous, not modern or unique to us. Which means disgust may be as old and widespread as infectious disease itself.

Researchers can’t yet say that disease-driven disgust is definitely universal. But so far, “in every place that it’s been looked for, it’s been found,” says Dana Hawley, an ecologist at Virginia Tech. Bonobos rebuff banana slices that have been situated too close to scat; scientists have spotted mother chimps wiping the bottoms of their young. Kangaroos eschew patches of grass that have been freckled with feces. Dik-diks—pointy-faced antelopes that weigh about 10 pounds apiece—sequester their waste in dunghills, potentially to avoid contaminating the teeny territories where they live. Bullfrog tadpoles flee from their fungus-infested pondmates; lobsters steer clear of crowded dens during deadly virus outbreaks. Nematodes, no longer than a millimeter, wriggle away from their dinner when they chemically sense that it’s been contaminated with bad microbes. Even dung beetles will turn their nose up at feces that seem to pose an infectious risk.

Read the rest of this article at: The Atlantic

A product race is under way in the world of artificial intelligence. Just this week, Google announced plans to release Bard, a search chatbot based on its proprietary large language model; yesterday, Microsoft held an event unveiling a next-generation web browser with a supercharged Bing interface powered by ChatGPT. Though most big tech companies have been quietly developing their own generative-AI tools for years, these giants are scrambling to demonstrate their chops after the public release and runaway adoption of OpenAI’s ChatGPT, which has accumulated more than 30 million users in two months.

OpenAI’s success is an apparent signal to tech leaders that deep-learning networks are the next frontier of the commercial internet. AI evangelists will similarly tell you that generative AI is destined to become the overlay for not only search engines, but also creative work, busywork, memo writing, research, homework, sketching, outlining, storyboarding, and teaching. It will, in this telling, remake and reimagine the world. At present, sorting the hype from genuine enthusiasm is difficult, but given the billions of dollars being funneled into this technology, it’s worth asking, in ways large and small: What does the world look like if the evangelists are right? If this AI paradigm shift arrives, one vital skill of the 21st century could be effectively talking to machines. And for now, that process involves writing—or, in tech vernacular, engineering—prompts.

Image-generating models such as DALL-E 2 and Midjourney and text-generation tools like ChatGPT market themselves as a means for creation. But in order to create, one must know how to guide the machines to a desired outcome. Asking ChatGPT to write a five-paragraph book report about Animal Farm will yield forgettable, even inaccurate results. But writing the introductory paragraph to the book report yourself and asking the tool to complete the essay will feed the machine valuable context. Better yet, instruct the machine, “Write a five-paragraph book report at a college level with elegant prose that draws on the history of the satirical allegorical novel Animal Farm. Reference Orwell’s ‘Why I Write’ while explaining the author’s stylistic choices in the novel.” It will yield a far more sophisticated and convincing output.

Read the rest of this article at: The Atlantic