In the past decade or so, there’s been a flowering of philosophical self-help—books authored by academics but intended to instruct us all. You can learn How to Be a Stoic, How to Be an Epicurean or How William James Can Save Your Life; you can walk Aristotle’s Way and go Hiking with Nietzsche. As of 2020, Oxford University Press has issued a series of “Guides to the Good Life”: short, accessible volumes that draw practical wisdom from historical traditions in philosophy, with entries on existentialism, Buddhism, Epicureanism, Confucianism and Kant.

In the interest of full disclosure: I’ve planted seeds in this garden myself. In 2017, I published Midlife: A Philosophical Guide, and five years later, Life is Hard: How Philosophy Can Help Us Find Our Way. Both could be shelved without injustice in the self-help section. But both exhibit some discomfort with that fact. When I wrote Midlife, on the heels of a midlife crisis—philosophy, which I had loved, felt hollow and repetitive, a treadmill of classes to teach and papers to write, with tenure a gilded cage—I adopted the conventions of the self-help genre partly tongue-in-cheek. The midlife crisis invites self-mockery, and I was happy to oblige: there’s respite to be found in laughing at oneself. If my options were to quit my job, have an extramarital affair or write a navel-gazing book, my wife and I were glad that I had chosen option three. I hope the book helped others too—but it never really faced up to the problems of its project.

Asking a professor of moral philosophy for life advice can seem quixotic, like asking an expert on the mind-body problem to perform brain surgery. Philosophy is an abstract field of argument and theory: this is true as much of ethics as it is of metaphysics. Why should reflection in this vein—ruthless, complex, conceptual—make us happier, more well-adjusted people? (If you’ve spent time with philosophers, you may doubt that it has such salutary effects.) And why should philosophers want to join the self-help movement, anyway?

Historians often trace the origins of self-help to 1859, when the aptly monikered Samuel Smiles published Self-Help: With Illustrations of Character and Conduct, a practical guide to self-improvement that became an international blockbuster. (The term itself derives from earlier writing by Thomas Carlyle and Ralph Waldo Emerson.)[1] Smiles inspired readers across the globe, from Nigeria to Japan. And he inspired imitators—thousands of them. Between his time and ours, self-help has grown into a multibillion-dollar industry.

[1] As Vladimir Trendafilov points out, Carlyle had used the phrase much earlier, in correspondence from 1822 and in fiction from 1831. According to Asa Briggs, Smiles took the phrase “self-help” from Emerson; but since Sartor Resartus was first published in America with a preface by Emerson in 1836, it is possible that Emerson took the phrase from Carlyle. Vladimir Trendafilov tracks Smiles’s use of the phrase instead to an unsigned editorial in the Leeds Times in 1836, written by Robert Nicoll: “Heaven helps those who help themselves, and self-help is the only effectual help.”

Read the rest of this article at: The Point

Consider, before we begin, that not long ago, the grassy pitch of Riyadh’s Al-Awwal Park was desert, and even before that, it was underwater. Around 250 million years ago—give or take—much of what we call the Arabian Peninsula was submerged beneath an ancient sea that teemed with life: algae, diatoms, and sundry other prehistoric critters in their trillions. When these creatures died, their bodies littered the ocean floor and became trapped. Bedrock accreted. Tectonic plates drifted, smashed together. And, under pressure and heat and time, those organisms transformed into the substance we now know as crude oil.

Whole eras pass. The dinosaurs come and go. Continents break up, sea levels fall, and a new landmass rises from the waves, eventually giving way to an inhospitable desert. The resourceful Homo sapiens that do make it their home toil and quarrel until the early 20th century, when much of the territory falls into the hands of the warrior they call Ibn Saud, who proclaims his fiefdom the new Kingdom of Saudi Arabia. Then, fortune: Prospectors discover that the bygone ocean has left behind some of the richest oil-and-gas reserves anywhere on the planet, making the ruling family of this fledgling desert kingdom among the wealthiest human beings alive.

The point being that places change—slowly, continuously, and, on rare occasion, all at once. Oh, and that for the craziness of what follows to make sense, perhaps keep in mind that while money doesn’t grow on trees, it does, in a few places, flow freely from the earth.

Anyway. Present day. Where once was only rock and dirt now stands a state-of-the-art soccer stadium, and on this balmy Friday night in Riyadh—a game night in the Saudi Pro League—fans of the home side, Al-Nassr, are arriving en masse. Outside the stadium, a group of what might be the world’s most polite soccer ultras have formed a human tunnel and are singing songs, handing out candy and free jerseys. On the concourse, fans wearing yellow-and-blue scarves over traditional Saudi thobes and abayas queue for coffee and popcorn, chattering excitedly about the chance to see their new superstar. Even now, they can’t believe it: Cristiano Ronaldo—the five-time Champions League winner, and contender for soccer’s best-ever player, here! In Saudi Arabia!

“The GOAT! The Greatest of All Time, come to my favorite club?” Ghaida Khaled, an Al-Nassr fan, says, her eyes gleaming from behind her niqab. “History is written right now!”

The fans here in Saudi—like the wider soccer world—are still in disbelief over Ronaldo’s arrival, even a year on since the Portuguese forward left Europe to sign a contract reportedly worth over $200 million annually with Al-Nassr, a midsize club in the Saudi Pro League. While fading stars have been tempted to the Middle East over the years with offers of palaces and Scrooge McDuck money, none had ever been as celebrated, or as decorated. Whereas another player might have been written off as a 37-year-old chasing one last payday, this was something bigger.

Read the rest of this article at: GQ

We tell ourselves stories to feed our delusions. It’s an ugly world out there, and so many Americans prefer the easy way out. We genuflect to the guru and the influencer; we admire the charlatans who can captivate a crowd and turn a quick buck. We prefer the CliffsNotes to the book, and all the better if there’s a movie version. We talk around the obvious truths of our reality so doggedly that avoidance of and aversion to truth become the drivers of discourse. By the time we realize the easy way out is no way at all, we’re already fucked.

When I say “American” here, I’m not coding for “white.” Indeed, I mean American—we all suffer from this peculiar affliction to varying degrees. I probably am coding a bit for middle- and upper-middle-class folk regardless of race, as those who feel they have footing to lose are more apt to avoid anything that shakes the climbing wall.

The American aversion to reality and attendant addiction to delusion and spectacle have long been prevalent, as noted by myriad cultural critics. Didion declared “the dream was teaching the dreamers how to live”; Baldwin highlighted and decried the “illusion about America, the myth about America to which we are clinging which has nothing to do with the lives we lead.” The timeliness and applicability of their writing—every year I return to their essays, and many of the insights radiate with more urgency and relevance than ever—now strike me less as a marker of their brilliance and more an indictment of our collective cultural inertia.

Read the rest of this article at: Defector

Scythians did terrible things. Two-thousand five-hundred years ago, these warrior nomads, who lived in the grasslands of what is now southern Ukraine, enjoyed a truly ferocious reputation. According to the Greek historian Herodotus, the Scythians drank the blood of their fallen enemies, took their heads back to their king and made trinkets out of their scalps. Sometimes, they draped whole human skins over their horses and used smaller pieces of human leather to make the quivers that held the deadly arrows for which they were famous.

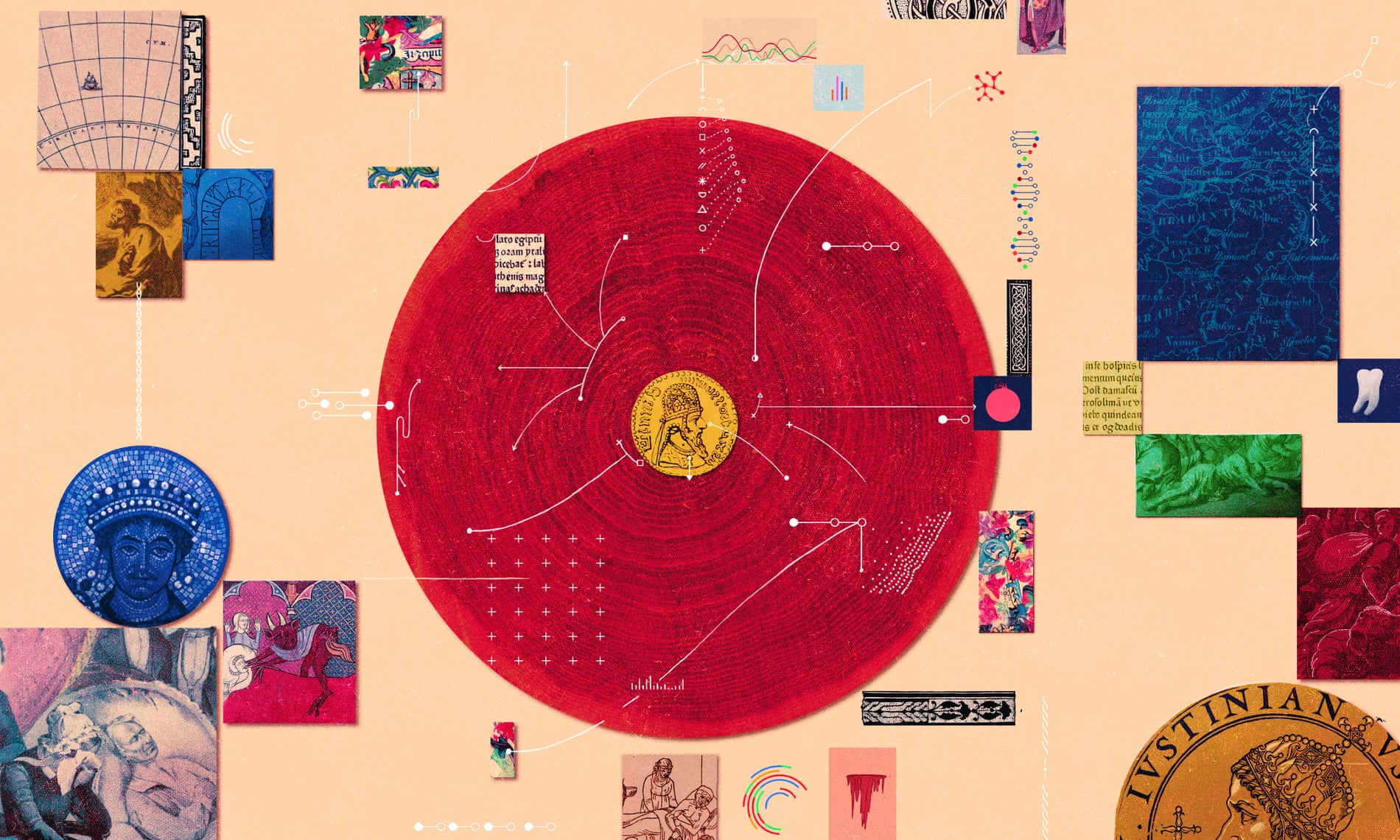

Readers have long doubted the truth of this story, as they did many of Herodotus’s more outlandish tales, gathered from all corners of the ancient world. (Not for nothing was the “father of history” also known as the “father of lies” in antiquity.) Recently, though, evidence has come to light that vindicates his account. In 2023, a team of scientists at the University of Copenhagen, led by Luise Ørsted Brandt, tested the composition of leather goods, including several quivers, recovered from Scythian tombs in Ukraine. By using a form of mass spectrometry, which let them read the “molecular barcode” of biological samples, the team found that while most of the Scythian leather came from sheep, goats, cows and horses, two of the quivers contained pieces of human skin. “Herodotus’s texts are sometimes questioned for their historical content, and some of the things he writes seem to be a little mythological, but in this case we could prove that he was right,” Brandt told me recently.

So, score one for Herodotus. But the hi-tech detective work by the Copenhagen researchers also points to something else about the future of history as a discipline. In its core techniques, history writing hasn’t changed that much since classical times. As a historian, you can do what Herodotus did – travel around talking to interesting people and gathering their recollections of events (though we now sometimes call this journalism). Or you can do what most of the historians that followed him did, which is to compile documents written in the past and about the past, and then try to make their different accounts and interpretations square up. That’s certainly the way I was trained in graduate school in the 2000s. We read primary texts written in the periods we studied, and works by later historians, and then tried to fit an argument based on those documents into a conversation conducted by those historians.

Read the rest of this article at: The Guardian

Most New Testament scholars agree that some 2,000 years ago a peripatetic Jewish preacher from Galilee was executed by the Romans, after a year or more of telling his followers about this world and the world to come. Most scholars – though not all.

But let’s stick with the mainstream for now: the Bible historians who harbour no doubt that the sandals of Yeshua ben Yosef really did leave imprints between Nazareth and Jerusalem early in the common era. They divide loosely into three groups, the largest of which includes Christian theologians who conflate the Jesus of faith with the historical figure, which usually means they accept the virgin birth, the miracles and the resurrection; although a few, such as Simon Gathercole, a professor at the University of Cambridge and a conservative evangelical, grapple seriously with the historical evidence.

Next are the liberal Christians who separate faith from history, and are prepared to go wherever the evidence leads, even if it contradicts traditional belief. Their most vocal representative is John Barton, an Anglican clergyman and Oxford scholar, who accepts that most Bible books were written by multiple authors, often over centuries, and that they diverge from history.

A third group, with views not far from Barton’s, are secular scholars who dismiss the miracle-rich parts of the New Testament while accepting that Jesus was, nonetheless, a figure rooted in history: the gospels, they contend, offer evidence of the main thrusts of his preaching life. A number of this group, including their most prolific member, Bart Ehrman, a Biblical historian at the University of North Carolina, are atheists who emerged from evangelical Christianity. In the spirit of full declaration, I should add that my own vantage point is similar to Ehrman’s: I was raised in an evangelical Christian family, the son of a ‘born-again’, tongues-talking, Jewish-born, Anglican bishop; but, from the age of 17, I came to doubt all that I once believed. Though I remained fascinated by the Abrahamic religions, my interest in them was not enough to prevent my drifting, via agnosticism, into atheism.

There is also a smaller, fourth group who threaten the largely peaceable disagreements between atheists, deists and more orthodox Christians by insisting that evidence for a historical Jesus is so flimsy as to cast doubt on his earthly existence altogether. This group – which includes its share of lapsed Christians – suggests that Jesus may have been a mythological figure who, like Romulus, of Roman legend, was later historicised.

But what is the evidence for Jesus’ existence? And how robust is it by the standards historians might deploy – which is to say: how much of the gospel story can be relied upon as truth? The answers have enormous implications, not just for the Catholic Church and for faith-obsessed countries like the United States, but for billions of individuals who grew up with the comforting picture of a loving Jesus in their hearts. Even for people like me, who dispensed with the God-soul-heaven-hell bits, the idea that this figure of childhood devotion might not have existed or, if he did, that we might know very little indeed about him, takes some swallowing. It involves a traumatic loss – which perhaps explains why the debate is so fraught, even among secular scholars.

Read the rest of this article at: Aeon